[4] Web Scrapers, a Guide and a Horror Story

By raxmur

A classic website can be thought of as a program that takes a set of information from its server, assembles it as needed and sends it back the browser. A good API (Application Programming Interface) is one of the most useful features a website could possibly have, because it exposes the raw information instead of wrapping it in its own UI, so that you get to decide how to assemble it and what to make of it.

Some websites unfortunately lack this great feature, perhaps because of developer laziness, incompetence or simply lack of interest. This is where the scraper comes in.

A scraper is like an API that you have to write yourself: it disassembles the webpages, extrapolates the required information and collects it into a data structure that you can work with. Scrapers might not be as powerful or as clean as normal APIs, but they’re often the only way to get access to information programmatically.

Tools of the trade

To write a scraper, you’re gonna need a few things:

- Knowledge of a programming language. I choose Ruby because it’s concise, has a REPL, and great libraries, but you can choose any language you see fit.

- A web browser with developer tools. All major browsers have these, so just pick your poison.

- curl. This is an invaluable tool for whoever works with the internet.

- A library to make HTTP requests. I use Net:HTTP because, well, it ships with Ruby.

- A good library to access XML, possibly through XPath. This is gonna be the deal breaker for the language you’re gonna use, because XML is a mess and you will have to deal with malformed XML. Oga is what I used. (Some use regular expressions to parse XML. I do not recommend it.)

- Some Javascript knowledge. Single-page websites often use their own internal REST API to fetch the information, so you may have to read through some frontend code.

A simple example

Writing a scraper is made up of two phases: first, you’ll need to understand how the website works and where to poke to obtain the information you want, then, you’ll have to parse the resulting webpage and select the interesting bits.

Let’s take lainchan as an example: let’s ignore that it has a perfectly good JSON API and say you want to write something that consumes the posts in a thread.

First of all, pay attention to the URLs. Websites are programs after all, so they (should) work in a logic way: try to find patterns by looking at the URL and the function of the page you’re on.

Let’s take lainchan, for example. In lainchan’s case, this is very simple: the first element in the path is the board ID (λ, cyb, lit or what have you). Then, in the index pages, the second is the page number, while in a thread it’s just a prefix, and the third is the ID of the thread. You can write some functions to generate the correct URLs, if you want:

BASE = 'https://lainchan.org/'

def index(board, page)

"#{BASE}#{board}/#{page == 1 ? "index" : page}.html"

end

def thread(board, id)

"#{BASE}#{board}/res/#{id}.html"

end

Now that we got the logic down, we can begin the second phase. Locate the elements you want to scrape and right click on them. There should be an option called “Inspect Element”, or something similar. This will open the Web Inspector and select the line in the HTML with the element you selected. Try to find a pattern in the elements' classes or structure. Let’s take lainchan again. Let’s say we only want to get the opening post bodies for each index page. All OPs have class ‘op’ and the body is inside a div with class ‘body’. Let’s translate that into code.

%w[uri net/http oga].each { |l| require l }

doc = Oga.parse_xml(Net::HTTP.get(URI(index 'cyb', 1)))

ops = doc.css('.op')

op_bodies = ops.map { |post| post.css('.body').text }

With just a few lines of code, we were able to easily get access to the information we needed. Wasn’t that easy?

A tale of bad code

Unfortunately for you, not all websites are as easy to scrape as lainchan. Sometimes you come across a huge tangled mess that might take you hours to unravel. Let me tell you a story about how not to write a single-page website.

dojin.co is a website that gathers download links to doujin music: Japanese indie music often inspired by videogames such as the Touhou series or Kancolle. It is amazingly heavy, for some reason; it is not rare for my browser tab to crash while I’m trying to search for something. One day I got tired of its bullshit and decided to write a scraper for it, and try to understand what was the cause of its abysmal performance.

Little did I know of the horrors lurking underneath.

My first guess was that the developer just did what everybody loves doing these days: throw a bunch of crudely combined JavaScript frameworks at it and call it a day. That’s understandable: the site appears to have only one developer, and he seems to enjoy web design more than programming. But as I open up the dev tools, I am greeted by a relatively short list of unorderly files with surprisingly little trace of framework-like drivel. Guess the code really is that bad.

The codebase is kind of a mess, but it doesn’t take me long to find the function called when scrolling down to load more albums. It makes a POST request to an ‘ajaxurl’ with some settings and an ‘arraySet’, which seems to indicate what to send.

‘ajaxurl’ turns out to be ‘/wp-admin/admin-ajax.php’. This tells me that it’s using wordpress underneath, and that the server side of the website is likely to be a huge mess of PHP. I open up a terminal and try curling the address:

$ curl -X POST http://dojin.co/wp-admin/admin-ajax.php 0

There doesn’t seem to be anything stopping me from doing it. Does this mean that the authentication is useless? Most likely. Let’s try curling with the parameters from ‘displayMore()':

$ curl -X POST \

http://dojin.co/wp-admin/adminajax.php?action=infiniteScrollingAction&postPer

Page=35&offset=0&arraySet=%5B34959%2C22539%2C16137%2C23916%2C34978%2C34982%2C34

$517%2C37926%2C1797%2C2453%2C2586%2C1462%2C11373%2C23913%2C34984%2C5716%2C6372%

2C7708%2C23910%2C2188%2C3441%2C17564%2C34980%2C37277%2C9111%5D

This returns a JSON file with the results in plain (malformed) HTML under the ‘data’ key. Why you would wrap that in a JSON object is beyond my comprehension, but hey, it works for him. After playing a bit with these parameters I figure out that the numbers in ‘arraySet’ are just album IDs. This is also a weird decision, but it sort of makes sense.

Later on, I find what seems to be the POST request invoked when searching: the payload is a hashmap containing some options and the search terms; the ‘artist’ and ‘style’ fields are represented by IDs. curling with those parameters returns the search results and an array with the album IDs. Bingo! Now all that’s left is figuring out where it’s getting the artist and genre IDs from.

I search the whole codebase for other AJAX requests, but nothing turns up. I try to run the profiler while searching and, while it does register an incredibly high call stack and a huge performance hit at various points, it surprisingly shows no signs of hitting the network. Baffled, I try using “Inspect Element” on one of the search suggestions, which is when the realization hits me like a bus.

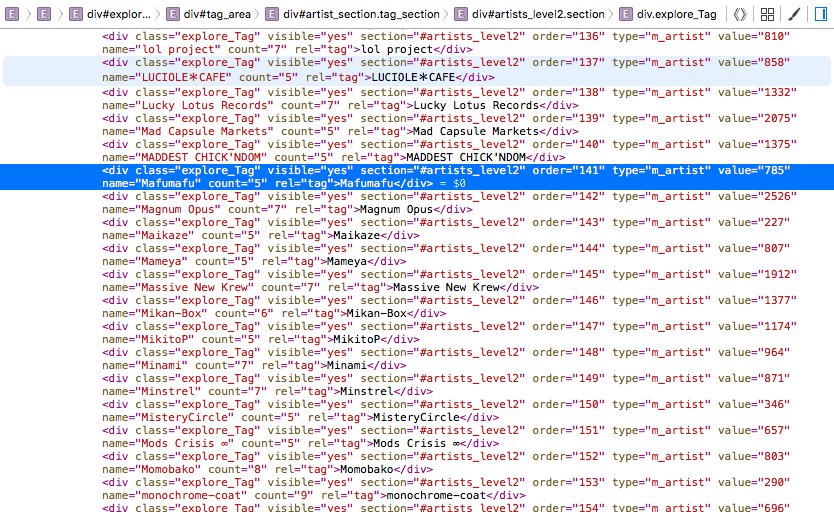

THEY WERE THERE ALL ALONG! All the artist and style IDs where all hidden in plain sight in the page HTML.

It takes me a while to recover from such a sight. I start writing the scraper, but the front page is so huge that nokogiri, the XML library I was using before, chokes on it, and I’m forced to switch to Oga. After fighting with XPath and encodings, I finally get a JSON file with all the artists and their respective IDs. It is 84 KB; given the verbosity of XML I estimate the source to be at least 700 KB, and I’m not far from the truth: the whole front page weighs a little short of 1 MB.

But why exactly is it so slow? Well, first of all, 1 MB of HTML is a lot to digest for a borderline toaster such as mine.

Second, this 1 MB document is being queried all the time. How do you suppose it shows the suggestions? Obviously it queries the whole thing for the elements with a name attribute that starts with the search term and changes their properties to make it so they appear in the suggestion list. Querying such a huge document takes time, especially since it has to run synchronously and thus hangs the page until it’s done. Now imagine this running each time you push a button on your keyboard.

Despite this madness, the scraper turned out to be pretty simple to write: I made it generate a nice HTML file with the results. It looks a lot like the website itself, but without all the crap.

And this, my friends, is the reason why a good API is the best feature of a website: it provides a standard interface that gives you the freedom to access its information the way you want to, unconstrained by the idiosyncrasies and failings of its UI.